AN ANTHROPOCENTRIC DESCRIPTION SCHEME FOR MOVIES CONTENT CLASSIFICATION AND INDEXING

AN ANTHROPOCENTRIC DESCRIPTION SCHEME FOR MOVIES CONTENT

CLASSIFICATION AND INDEXING

N.Vretos,V.Solachidis and I.Pitas

Department of Informatics

University of Thessaloniki,54124,Thessaloniki,Greece

phone:+30-2310996304,fax:+30-2310996304

email:vretos,vasilis,pitas@aiia.csd.auth.gr

ABSTRACT

MPEG-7has emerged as the standard for multimedia data content description.As it is in its early age,it tries to evolve towards a direction in which semantic content description can be implemented.In this paper we provide a number of classes to extend the MPEG-7standard so that it can handle the video media data,in a more uniform way.Many descrip-tors(Ds)and description schemes(DSs)already provided by the MPEG-7standard,can help to implement semantics of a media.However,by grouping together several MPEG-7 classes and adding new Ds,better results in movie produc-tion and analysis tasks can be produced.Several classes are proposed in this context and we show that the corresponding scheme produces a new pro?le which is more?exible in all types of applications as they are described in[1].

1.INTRODUCTION

Digital video is the most essential media nowadays.It is used in many multimedia applications such as communication,en-tertainment,education etc.It is very easy to conclude that video data increase exponentially with time and researchers are focusing on?nding better ways in classi?cation and re-trieval applications for video databases.The way of con-structing videos has also changed in the last years.The po-tential of digital videos gives producers better editing tools for a?lm production.Several applications have been pro-posed that help producers in doing modern?lm types and manipulate all the?lm data faster and more accurately.For a better manipulation of all the above,MPEG-7standard-izes the set of Ds,DSs,the description de?nition language (DDL)and the description encoding[2]-[6].Despite its early age,many researchers have proposed several Ds and DSs to improve MPEG-7performance in terms of semantic content description[7],[8].

In this paper we will try to develop several Ds and DSs that will describe digital video content in a better and more sophisticated way.Our efforts originate from the idea that a movie entity is a part of several objects prior to and past to the editing process.We incorporate information provided by the preproduction process to improve semantic media content description.This paper is organized as follows.In Section2, we describe the Ds and DSs.In Section3,we give some ex-amples of use of real data.Finally in Section4,a conclusion and future work directions are described.

2.MPEG-7VIDEO DESCRIPTORS

In the MPEG-7standard several descriptors are de?ned which enable the implementation of video content descrip-tions.Moreover MPEG-7must provide mechanisms that can

Class Name Characterization

Movie Class Container Class

Version Class Container Class

Scene Class Container Class

Shot Class Container Class

Take Class Container Class

Frame Class Object Class

Sound Class Container Class

Actor Class Object CLass

Object Appearance Class Event Class

High Order Semantic Class Container Class

Camera Class Object CLass

Camera Use Class Event Class

Lens Class Object Class

Table1:Classes introduced in the new framework in order to implement semantics in multimedia support

propagate semantic entities from the preproduction to the postproduction phase.Table1illustrates a summary of all the classes that we propose in order to assure this connectivity between the pre and the post production phases.

Figure1:The interoperability of all the classes enforces se-mantic entities propagation and exchange in the preproduc-tion phase.

Before going any further in providing the classes’details, we explain the characterization of those classes and their relations(?gure1).Three types of classes are introduced: container,object and event classes.Container classes,as in-dicated by their name,contain other classes.For instance, a movie class contains scenes that in turn contain shots or takes,which in turn contain frames(optionally).This en-capsulation can therefore be very informative in the relation

between semantics characteristics of those classes,because parent classes can propagate semantics to child classes and vice versa.For example,a scene which is identi?ed as a night scene,can propagate this semantic entity to its child classes(Take or Shot).This global approach of semantic en-tities not only facilitates the semantic extraction,but it also gives a research framework in which low-level features can be statistically compared very fast in the semantic informa-tion extraction process.The object oriented interface(OOI) which is applied in this framework provides?exibility in the use and the implementation of algorithms for high seman-tic extraction.On the other hand,object classes are constant physical objects or abstract objects that interact with the me-dia.Any relations of the form“this object interacts with that object”are implemented within this interface.For example a “speci?c actor is playing in a speci?c movie”,or“a speci?c camera is used in this take”,or“a speci?c frame is contained by this take or shot”.Finally,the events classes are used to implement the interaction of the objects with the movie.An example of this is“the speci?c camera object is“panning”on this take”.From the examples we can clearly conclude that high semantics relation can be easily implemented with the use of those classes.More speci?cally:

The Movie Class is the root class of the hierarchy.It con-tains all other classes and describes semantically all the information for a piece of multimedia content.The movie class holds what can be considered as static movie infor-mation,such as crew,actors,cameras,director,title etc.

It also holds the list of the movie’s static scenes where different instances of the Scene class(described later)are stored.Finally,the movie class combines different seg-ments of the static information in order to create different movie versions within the Version class.

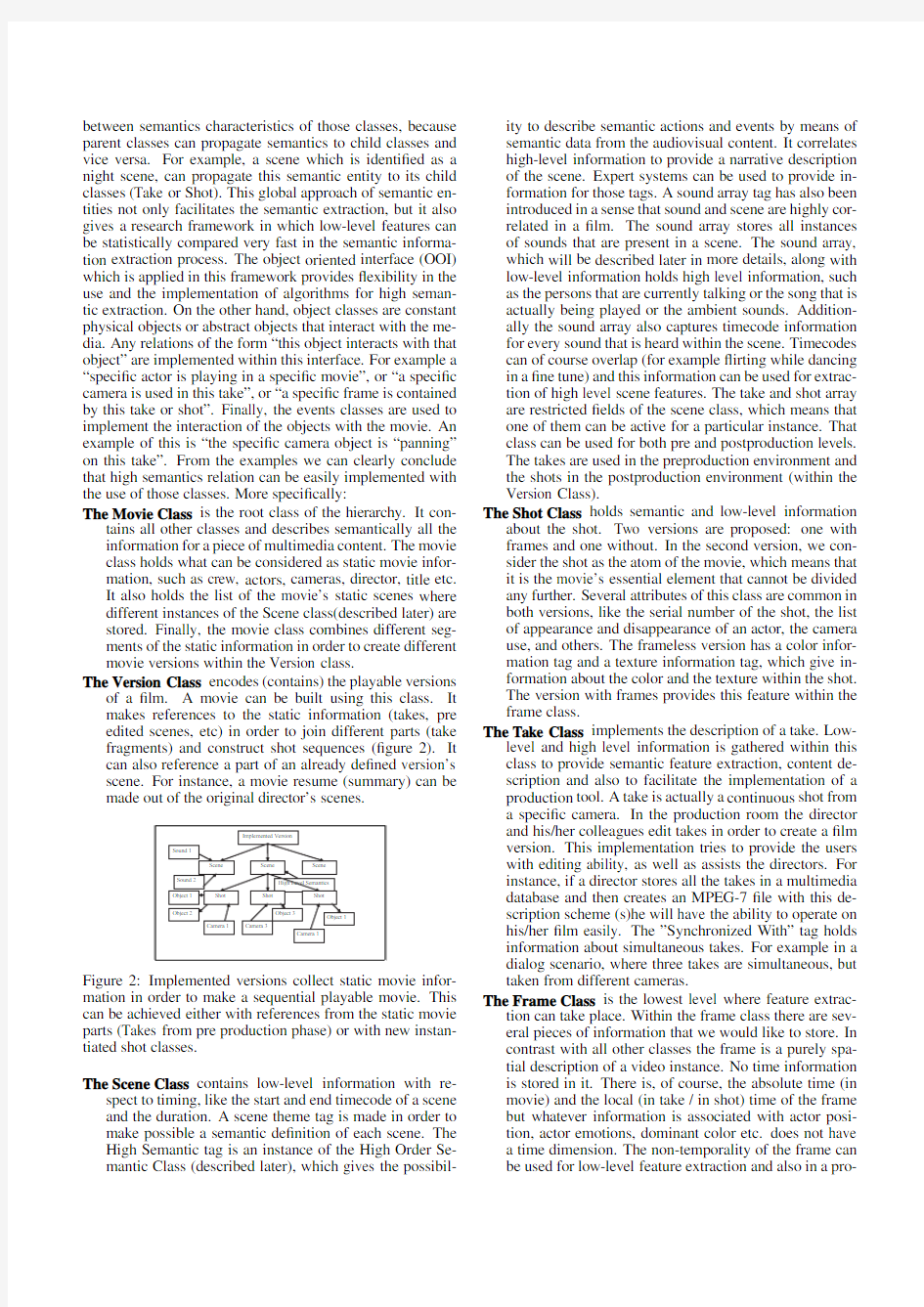

The Version Class encodes(contains)the playable versions of a?lm.A movie can be built using this class.It makes references to the static information(takes,pre edited scenes,etc)in order to join different parts(take fragments)and construct shot sequences(?gure2).It can also reference a part of an already de?ned version’s scene.For instance,a movie resume(summary)can be made out of the original director’s scenes.

Figure2:Implemented versions collect static movie infor-mation in order to make a sequential playable movie.This can be achieved either with references from the static movie parts(Takes from pre production phase)or with new instan-tiated shot classes.

The Scene Class contains low-level information with re-spect to timing,like the start and end timecode of a scene and the duration.A scene theme tag is made in order to make possible a semantic de?nition of each scene.The High Semantic tag is an instance of the High Order Se-mantic Class(described later),which gives the possibil-

ity to describe semantic actions and events by means of semantic data from the audiovisual content.It correlates high-level information to provide a narrative description of the scene.Expert systems can be used to provide in-formation for those tags.A sound array tag has also been introduced in a sense that sound and scene are highly cor-related in a?lm.The sound array stores all instances of sounds that are present in a scene.The sound array, which will be described later in more details,along with low-level information holds high level information,such as the persons that are currently talking or the song that is actually being played or the ambient sounds.Addition-ally the sound array also captures timecode information for every sound that is heard within the scene.Timecodes can of course overlap(for example?irting while dancing in a?ne tune)and this information can be used for extrac-tion of high level scene features.The take and shot array are restricted?elds of the scene class,which means that one of them can be active for a particular instance.That class can be used for both pre and postproduction levels.

The takes are used in the preproduction environment and the shots in the postproduction environment(within the Version Class).

The Shot Class holds semantic and low-level information about the shot.Two versions are proposed:one with frames and one without.In the second version,we con-sider the shot as the atom of the movie,which means that it is the movie’s essential element that cannot be divided any further.Several attributes of this class are common in both versions,like the serial number of the shot,the list of appearance and disappearance of an actor,the camera use,and others.The frameless version has a color infor-mation tag and a texture information tag,which give in-formation about the color and the texture within the shot.

The version with frames provides this feature within the frame class.

The Take Class implements the description of a take.Low-level and high level information is gathered within this class to provide semantic feature extraction,content de-scription and also to facilitate the implementation of a production tool.A take is actually a continuous shot from

a speci?c camera.In the production room the director

and his/her colleagues edit takes in order to create a?lm version.This implementation tries to provide the users with editing ability,as well as assists the directors.For instance,if a director stores all the takes in a multimedia database and then creates an MPEG-7?le with this de-scription scheme(s)he will have the ability to operate on his/her?lm easily.The”Synchronized With”tag holds information about simultaneous takes.For example in a dialog scenario,where three takes are simultaneous,but taken from different cameras.

The Frame Class is the lowest level where feature extrac-tion can take place.Within the frame class there are sev-eral pieces of information that we would like to store.In contrast with all other classes the frame is a purely spa-tial description of a video instance.No time information is stored in it.There is,of course,the absolute time(in movie)and the local(in take/in shot)time of the frame but whatever information is associated with actor posi-tion,actor emotions,dominant color etc.does not have

a time dimension.The non-temporality of the frame can

be used for low-level feature extraction and also in a pro-

Figure3:Frames can be used with Take and Shot classes for a deeper analysis,giving rise to an exponential growth of the MPEG-7?le size.

duction tool for a frame-by-frame editing process(?gure

3).

The Sound Class interfaces all kind of sounds that can ap-pear in multimedia.Speech,music and noise are the ba-sic parts of what can be heard in a movie context.This class holds also the time that a particular sound started and ended within a scene,and attributes that character-ize this sound.The speech tag holds an array of speakers within the scene and also the general speech type(nar-ration,monolog,dialog,etc).The sound class provides useful information for high level feature extraction.

The Actor Class&The Object Appearance Class The actor class implements all the information that is useful to describe an actor and also gives the possibility to seek for one in a database,based on a visual description.

Low-level information for the actor’s interactions with shots or takes is stored in the Object Appearance Class.

This information include the time that the actor enters and leaves the shot.Also,if the actor re-enters the shot several times this class holds a list of time-in and time-out instances in order to handle that.A semantic list of what the actor/object is actually doing in the shot,like if he/she is running or just moving or killing someone,is stored for high-level feature extraction in the High Order Semantic Class.The Motion of the actor/object is held as low-level information.The Key Points List is used to describe any possible known Region Of Interest(ROI), like the bounding box,the convex hull,the features points etc.For instance,this implementation can provide the trajectory of a speci?c ROI like the eye or a lip’s lower edge etc.The pose estimation of the face and the emotions of the actor(with an intensity value)within the shot can also be captured.The latter three tags can be used to create high-level information automatically.

The sound class also holds higher-level information for the speakers and in combining the information we can generate high-level semantics based also in the sound content of the multimedia to provide instantiations of the High Order Semantic Class.The High Order Semantic Class organizes and combines the semantic features,which are derived from the au-diovisual content.For example,if a sequence of actors shaking hands is followed by low volume crowd noise and positives emotions this could probably be a countries leaders handshake.The Object’s Narrative Identi?cation hosts semantics of actors and objects in a scene context, for example(Tom’s Car)and not only the object identi?-cation like(Tom,Car).The Action list refers to actions performed by actors or other objects like“car crashes be-fore a wall”or“actor is eating”.The events list holds information of events that might occur in a scene like“A plane crashes”.Finally,the two semantic tags,Time and Location,de?ne semantics for narrative time(night,day, exact narrative time etc)and narrative location(indoor, outdoor,cityscape,landscape etc).

The Camera&The Camera Use Class The camera class holds all the information of a camera,such as manufac-turer,type,lenses etc.The Camera Use class,which con-tains the camera’s interaction with the?lm.The latter is very useful for low-level and high-level feature extrac-tion from the?lm.The camera motion tag uses a string of characters that conform to the traditional camera mo-tion styles.New styles have no need to re-implement the class.The current zoom factor and lens is used for feature extraction as well.

The Lens Class implements the characteristics of several lenses that will be used in the production of a movie or documentary.It is useful to know and store this informa-tion in order to better extract low-level features from the movie.Also for educational reasons one can search for movies that are recorded with a particular lens.

This OOI essentially constitutes a novel approach of digital video processing.We believe that in a video retrieval applica-tion one can post queries in a form that only simple low-level features cannot answer yet.Also,video annotation has been proven[7]to be non productive,because of the objectivity of the annotators and the time consuming annotation process. The proposed classes have the ability to standardize the an-notation context and they are de?ned in a way that low-level features can

be integrated,in order to enable an automatic ex-traction of those high-level features.In?gure4one can see the relation between semantic space and technical(low-level) features space.The proposed classes are an amalgam of low-level features,like histograms,FFTs etc,and high seman-tic entities,like scene theme,person recognition,emotions, sounds quali?cation,etc.Nowadays researchers are very in-terested in extracting high semantic features[9]-[11].Having this in mind,we believe that the proposed template can give the genre of a new digital video processing approach. Figure4:Low-level features space is captured by human per-ception algorithms and this mechanism will produce the se-mantic content of the frame.

3.EXAMPLES OF USE

Three types of examples,will be provide in this section. These include one for preproduction environment,one for postproduction and the combination of preproduction and postproduction.

?In preproduction environment the produced xml will in-clude a movie class in witch takes,scenes,sound infor-mation and all the static information of the movie will be encapsulated.In Figure5,one can visualize the en-capsulation of those classes.Applications can handle the MPEG-7?le in order to produce helpful tips for the di-rector while he/she is in the editing room or better assist him in editing with smart agents

application.

Figure5:Shots exist only in the post-production phase as fragments of takes from the pre-production phase,and in-herits all semantic and low-level features extracted for the takes in the pre-production phase.This constitutes the seman-tic propagation mechanism.Several versions can be imple-mented without different video data?les.

?In the second type of application all the types of the pull and push applications,as de?ned in the MPEG-7stan-dard[1]can be realized or improved.Retrieval appli-cation will be able to handle in a more uniform way all kinds of request whether the latter are associated with low-level features or high semantic entities.?Finally,the third type of application,is the essential rea-son for implementing such a framework.In such an ap-plication a total interactivity with the user can be estab-lished,by providing even the ability to reediting a?lm and create different?lm version with the same amount of data.For example,by saving the takes of a?lm and altering the editing process,the user can reproduce the ?lm from scratch and with no additional storage of heavy video?les.Creating different permutation arrays of takes and scenes results in different versions of the same?lm.

Nowadays media supports(DVD,VCD,etc)can hold a very large amount of data.Such an implementation can be realistic,if we consider that already produced DVDs provides users something more than just a movie e.g.

(behind the scene tracks,or director’s cuts,actors’inter-views etc).Media Interactivity in media seems to gain a big share in the market,the proposed OOI can provide a very useful product.It is obvious that such a tool can give rise to education programs,interactive television and all the new revolutionary approaches of multimedia world.

4.CONCLUSION AND FURTHER WORK AREAS As a conclusion we underline the fact that this framework can be very useful for the new era of image processing witch is focusing in semantic feature extraction and we believe that it can provide speci?c research goals.Having this in mind,in the future we will concentrate our research in?lling automat-ically the classes’attributes.On going research has already underdevelopment tools for extraction of high semantic fea-ture.

5.ACKNOWLEDGEMENT

The work presented was developed within NM2(New me-dia for a New Millennium),a European Integrated Project (https://www.wendangku.net/doc/ac12404680.html,),funded under the European Com-mission IST FP6programme.

REFERENCES

[1]I.S.O,“Information technology–multimedia content

description interface-part6:Reference software,”, no.ISO/IEC JTC1/SC29N4163,2001.

[2]I.S.O,“Information technology–multimedia content

description interface-part1:Systems,”,no.ISO/IEC JTC1/SC29N4153,2001.

[3]I.S.O,“Information technology–multimedia content

description interface-part2:Description de?nition language,”,no.ISO/IEC JTC1/SC29N4155,2001.

[4]I.S.O,“Information technology–multimedia content

description interface-part3:Visual,”,no.ISO/IEC JTC1/SC29N4157,2001.

[5]I.S.O,“Information technology–multimedia content

description interface-part4:Audio,”,no.ISO/IEC JTC1/SC29N4159,2001.

[6]I.S.O,“Information technology–multimedia content

description interface-part5:Multimedia description schemes,”,no.ISO/IEC JTC1/SC29N4161,2001.

[7]John P.Eakins,“Retrieval of still images by content,”

pp.111–138,2001.

[8]A.Vakali,M.S.Hacid,and A.Elmagarmid,“Mpeg-7

based description schemes for multi-level video content classi?cation,”IVC,vol.22,no.5,pp.367–378,May 2004.

[9]M.Krinidis,G.Stamou,H.Teutsch,S.Spors,N.Niko-

laidis,R.Rabenstein,and I.Pitas,“An audio-visual database for evaluating person tracking algorithms,”in Proc.of IEEE International Conference on Acoustics, Speech and Signal Processing,Philadelphia,USA,18-23March2005,pp.452–455.

[10]J.K.Aggarwal and Q.Cai,“Human motion analysis:

A review,”Computer Vision and Image Understanding,

vol.73,no.3,pp.428–440,1999.

[11]J.J.Wang and S.Singh,“Video analysis of human dy-

namics-a survey,”Real-Time Imaging,vol.9,no.5, pp.321–346,October2003.